We had Maria Camanes join us to share her favourite tips for crawling eCommcere sites with seemingly endless pages. You can access the slides from her talk here, enjoy her summary of her key points!

But first let’s have a look at some of the most common problems eCommerce sites have to face nowadays:

Common Problems crawling large eCommerce sites:

A missing or wrongly implemented product retirement strategy can – and will – have a negative impact on any ecommerce site’s organic performance:

First of all, discontinued or temporarily unavailable products can result in large quantities of 404s, broken links and empty category pages (thin content), which are bad for a number of reasons: displaying a 404 or empty page to your customers will result in bad UX but also on large quantities of link equity being lost.

Furthermore, thin category pages (with limited stock) are also font of bad UX, lost sales and they put the site at risk of algorithm penalties.

For this reason, my top tips on how to crawl large ecommerce sites will focus on ‘How to find out of stock products as well as thin category pages and – as these often occur in large quantities on ecommerce sites – how to deal with them at scale’:

Tip 1: Find out of stock products with Screaming Frog’s ‘Custom search’

Out of stock products that return a 200 status code will be difficult to find at scale, since they will not be picked up via a standard crawl.

However, you can run a crawl using Screaming Frog’s ‘Custom search’ feature to find all the product pages within your site that contain a specific “out of stock’ string.

You just need to find the ‘Out of stock’ identifier in the HTML of your product page template and paste it in the search function:

You can have a look at this article where I run you through the whole process in more details.

Tip 2: Find all your empty category pages

You can apply this process to all the category pages on your site in order to find product listing pages that don’t have any products listed:

The process works in the same way as the one I explained above, but this time, instead of an ‘Out of stock’ identifier (such as ‘product out of stock’ or ‘currently unavailable’) you’ll have to find a ‘no products’ identifier within the HTML of your category page template and paste it into Screaming Frog’s feature. Using the above screenshot as an example, we would be looking at something like this:

Please note that this function is case sensitive.

Tip 3: Find all your empty category pages

If your site is too big and you are having issues with allocated memory on your desktop, you can limit your crawl to only include certain types of URLs (e.g. including only category URLs or excluding product URLs by using the ‘include’ or ‘exclude’ features on the tool).

For example, if your products are placed within a /products/ folder, you could add this regex on the ‘exclude’ feature to exclude these from the crawl: https://www.example.com/products/.* This way Screaming Frog won’t crawl any URLs within that URL path.

You could do something similar by including only URLs under a specific path. For example, if you only want to crawl category pages on a specific folder (e.g. /categories/) you could use the include feature instead by adding the below: https://www.example.com/categories/.*

Other ways in which you can break down your website is by gender (e.g. crawl only /womens/, /mens/ or /kids/) or by department (e.g. /clothing/, /swimwear/, /accessories/ etc.).

Tip 4: Find thin category pages with limited stock

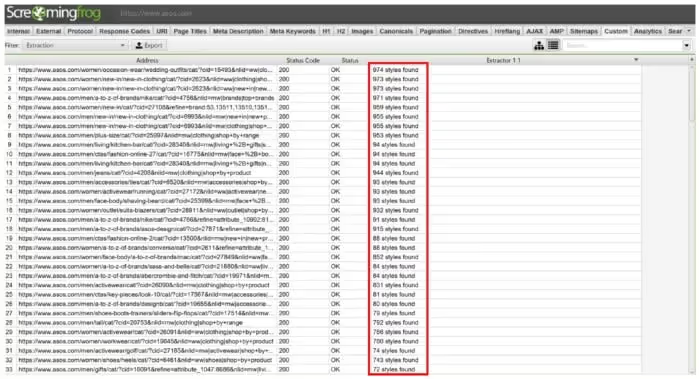

You can use Screaming Frog’s ‘Custom extraction’ tool to find thin category pages with limited stock:

This type of pages can be classified as thin content by search engines and put the site at risk of algorithm penalties.

Furthermore, they are font of a bad user experience (UX), lost sales and therefore revenue loses.

Fetch the contents of the ‘style count’ container of your category page template by typing it in the Screaming Frog’s tool: //*[contains(@class, ‘[enter here the class name]’)]

When the crawl finishes, you’ll get something like this:

Tip 5: Learn XPath for SEO purposes

There are many other elements of a page you can extract with XPath. For eCommerce sites in particular, some useful things you can do are:

- Extracting the content snippets of your category pages so that you can review them and ensure they are optimised, find short content snippets on your site that need to be extended, etc.

- Extract the price of every single product page on your website

- Extracting page titles, meta descriptions or main headings

- Extracting all links in a document (e.g. all products within a category page)

- Get hreflangs, canonical URLs or AMP URLs

If you want to learn how to exploit the power of XPath using commonly available SEO tools, you can check out this SEO guide to XPath .

Thank you Maria! For anyone looking to sign up for more practical tips around SEO and digital and content marketing, you can sign up here, see you at 4:00pm!

You can learn more about our software and how it fits with your SEO strategy.